In some domains, the visual inputs to machine learning models are very large. Aerial and histopathological images are two examples. For supervised learning problems, whether classification or regression, one issue is how to deal with the sheer input size. In these domains, a single input sample is likely to reach 50,000×50,000 pixels. If you think GPU memory capacity will soon reach that level, I would say you should probably revisit how GPU memory size has grown over the past 10 years.
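To get a feel for the numbers, here is a rough back-of-the-envelope estimate of how much memory a single full-resolution image would take. The 3 color channels and the float32 precision are my assumptions for illustration; only the 50,000×50,000 size comes from the discussion above.

```python
# Rough memory estimate for one full-resolution input image.
# Assumptions (for illustration only): 3 color channels, float32 values.
height = width = 50_000
channels = 3
bytes_per_value = 4  # float32

n_pixels = height * width
input_bytes = n_pixels * channels * bytes_per_value
input_gb = input_bytes / 1e9

print(f"{n_pixels:,} pixels")                   # 2,500,000,000 pixels
print(f"{input_gb:.0f} GB for the input alone")  # 30 GB for the input alone
```

Keep in mind this counts only the input tensor. The intermediate activations a network has to store during training would come on top of that.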

People in the AI community have therefore come up with some solutions to overcome the issue. You guessed it! Let’s crop the largest possible patch from the original image and feed it into our model for the task. That is a working solution. The problem, though, is that a single patch is not a good representative of the original data. How do you decide where to take the patch from? Since the largest patch you can crop is a few orders of magnitude smaller than the original data, a lot of valuable data is lost and the performance is not as good as it should be.

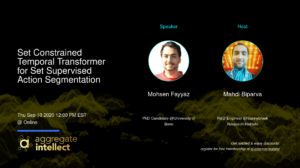

The first AISC event that I hosted as the official Computer Vision stream owner, with an invited speaker, was on a recent paper that deals with this issue. After passing a few milestones, I am glad I could get the AISC organizing team to have me on board. I am gradually learning how to reach out to people and organize events efficiently. It takes time, though. Volunteer work is always challenging, but the joy of sharing your knowledge and expertise with the community and learning in return is always fascinating.

Let’s get back to the issue we were talking about. We cannot use the original input as it is because of memory and computation limitations, and a single patch does not fully leverage what we have. What has gained momentum in the community is creating a set of multiple patches sampled smartly across the large original image. Instead of feeding the model a single patch, the core idea is to form a set of patches sampled from the original data and feed the whole set into the model. An important characteristic of a set is that it has no order, so a model operating on it should be permutation invariant: {1, 2, 3} and {2, 3, 1} are the same set and must produce the same output. Max-pooling over the set elements is one example of a permutation-invariant operator.
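Here is a minimal sketch of the idea in NumPy, under several assumptions of mine: the patches are sampled uniformly at random (a stand-in for any smarter sampling strategy), the encoder is a toy random projection rather than a real CNN, and the helper names `sample_patches`, `encode`, and `set_representation` are hypothetical. It only illustrates that max-pooling over a set of patch features does not depend on the order of the patches; it is not the method from the paper discussed in this post.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_patches(image, patch_size, n_patches, rng):
    """Crop n_patches square patches at uniformly random locations."""
    h, w = image.shape[:2]
    ys = rng.integers(0, h - patch_size + 1, size=n_patches)
    xs = rng.integers(0, w - patch_size + 1, size=n_patches)
    return np.stack([image[y:y + patch_size, x:x + patch_size]
                     for y, x in zip(ys, xs)])

def encode(patches, weights):
    """Toy stand-in for a CNN encoder: flatten each patch and project it."""
    flat = patches.reshape(len(patches), -1)
    return np.tanh(flat @ weights)

def set_representation(features):
    """Max-pool over the set axis, so patch order cannot matter."""
    return features.max(axis=0)

image = rng.random((512, 512, 3))  # small stand-in for a huge image
patches = sample_patches(image, patch_size=32, n_patches=16, rng=rng)
weights = rng.standard_normal((32 * 32 * 3, 8))  # hypothetical encoder weights

features = encode(patches, weights)  # one 8-dim feature vector per patch
pooled = set_representation(features)
shuffled = set_representation(features[rng.permutation(len(features))])

print(np.array_equal(pooled, shuffled))  # True
```

Whatever order the patch features arrive in, the pooled vector is identical, which is exactly the property we want from a model that consumes a set.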

I was happy to have Shivam Kalra as the invited speaker for this amazing presentation. He is one of the authors of the ECCV’20 paper “Learning Permutation Invariant Representations using Memory Networks”. In this talk, he very nicely motivates the problem, describes previous papers, and explains the proposed approach. A hierarchical deep representation is used as the backbone to encode input sets, and memory modules with attention mechanisms are used to provide a permutation-invariant formulation in the model.

If you are interested in reading the paper and learning more details of the work, please click on this link. The information about the event is provided in this link. You can watch the YouTube video stream below. I am going to post about subsequent events in the future, so stay tuned for more cool stuff.